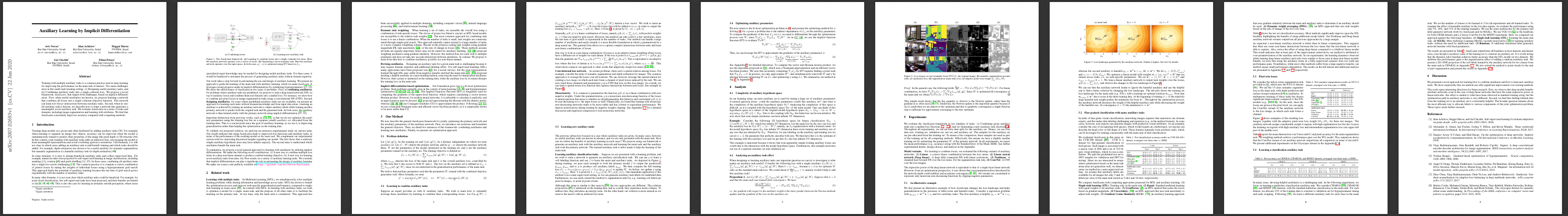

Training with multiple auxiliary tasks is a common practice used in deep learning for improving the performance on the main task of interest. Two main challenges arise in this multi-task learning setting: (i) Designing useful auxiliary tasks; and (ii) Combining auxiliary tasks into a single coherent loss. We propose a novel framework, AuxiLearn, that targets both challenges, based on implicit differentiation. First, when useful auxiliaries are known, we propose learning a network that combines all losses into a single coherent objective function. This network can learn non-linear interactions between auxiliary tasks. Second, when no useful auxiliary task is known, we describe how to learn a network that generates a meaningful, novel auxiliary task. We evaluate AuxiLearn in a series of tasks and domains, including image segmentation and learning with attributes. We find that AuxiLearn consistently improves accuracy compared with competing methods.

Unified framework for auxiliary learning

In this work, we take a step towards automating the use and design of auxiliary learning. We present AuxiLearn, an approach that guides the learning of the main task with auxiliary tasks. It leverages recent progress in implicit differentiation for hyperparameter optimization. We show the effectiveness of AuxiLearn in two types of problems. First, for combining auxiliaries, in problems where auxiliary tasks are predefined, we propose to train a deep neural network (NN) on top of the auxiliary losses that combines them non-linearly into a unified loss. For instance, we show how to combine per-pixel losses in image segmentation tasks using a convolutional NN (CNN). Second, for designing auxiliaries, in cases where predefined auxiliary tasks are not available, we present an approach for learning such tasks from input data alone, without domain knowledge.
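To make the role of implicit differentiation concrete, the following is a minimal sketch on a one-dimensional toy problem, not the AuxiLearn implementation. The quadratic losses, the scalar auxiliary weight `phi`, and all function names are assumptions chosen so that the inner minimizer has a closed form. The gradient of the main-task loss with respect to `phi` is obtained through the implicit function theorem and checked against finite differences.

```python
def inner_solution(phi):
    """Minimizer over w of the toy training loss
    L_T(w, phi) = (w - 1)**2 + phi * (w - 3)**2 (main + weighted auxiliary)."""
    return (1.0 + 3.0 * phi) / (1.0 + phi)


def hypergradient(phi):
    """d L_A / d phi via the implicit function theorem, where
    L_A(w) = (w - 2)**2 is a toy held-out main-task loss."""
    w = inner_solution(phi)
    dLA_dw = 2.0 * (w - 2.0)        # gradient of L_A with respect to w
    d2LT_dw2 = 2.0 + 2.0 * phi      # Hessian of L_T with respect to w
    d2LT_dwdphi = 2.0 * (w - 3.0)   # mixed second derivative of L_T
    return -dLA_dw * d2LT_dwdphi / d2LT_dw2


def finite_difference(phi, eps=1e-6):
    """Central-difference estimate of the same gradient, for checking."""
    def outer(p):
        return (inner_solution(p) - 2.0) ** 2
    return (outer(phi + eps) - outer(phi - eps)) / (2.0 * eps)


def optimize_phi(phi=0.2, lr=0.5, steps=200):
    """Plain gradient descent on phi using the implicit hypergradient."""
    for _ in range(steps):
        phi -= lr * hypergradient(phi)
    return phi
```

In this toy setting the Hessian is a scalar, so inverting it is a division. With neural networks the Hessian is far too large to invert exactly, so the inverse would have to be approximated.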

Nonlinear loss combinations

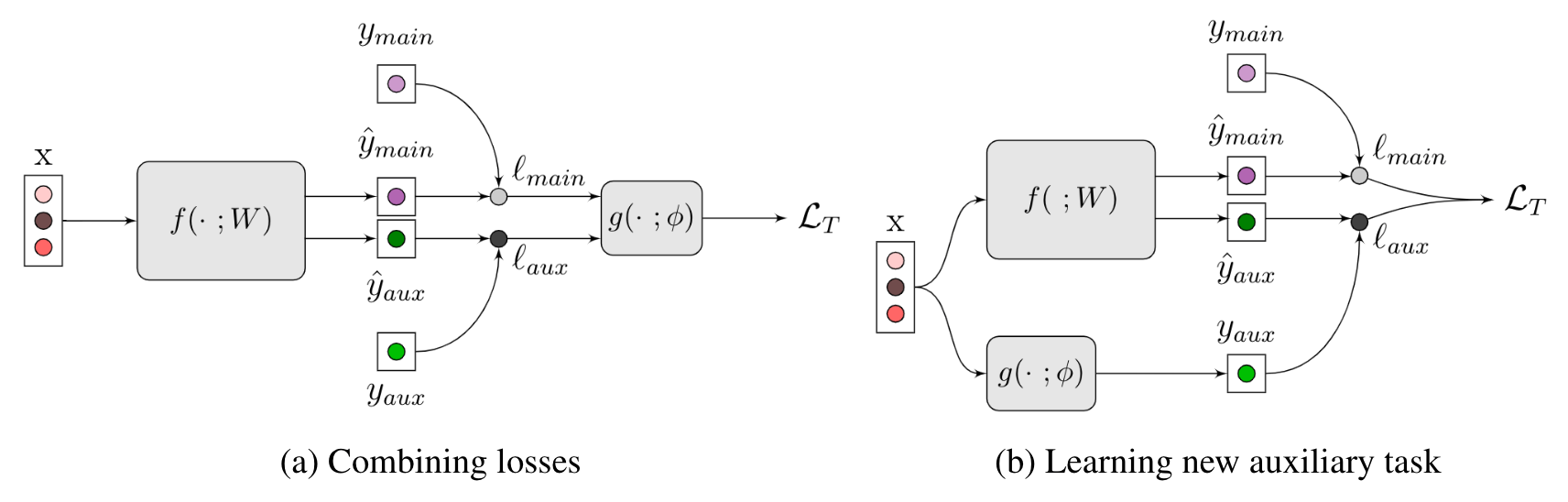
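As a rough illustration of what a learned, nonlinear combination of losses can look like, here is a small sketch that contrasts a fixed linear weighting with a tiny two-layer network acting on the vector of task losses. The class name `LossNetwork`, the hidden size, the softplus activation, and the random initialization are all assumptions made for illustration and are not taken from the AuxiLearn code.

```python
import math
import random


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def linear_combination(losses, weights):
    """Baseline: a weighted sum of the task losses."""
    return sum(w * l for w, l in zip(weights, losses))


class LossNetwork:
    """Hypothetical two-layer network mapping task losses to one scalar."""

    def __init__(self, n_losses, n_hidden=4, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_losses)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]

    def __call__(self, losses):
        # Hidden units can model interactions between different task losses.
        hidden = [
            softplus(sum(w * l for w, l in zip(row, losses)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        return sum(w * h for w, h in zip(self.w2, hidden))


# Usage: losses for [main, auxiliary 1, auxiliary 2] on one batch.
batch_losses = [1.0, 2.0, 3.0]
fixed = linear_combination(batch_losses, [1.0, 0.5, 0.0])
learned = LossNetwork(n_losses=3)(batch_losses)
```

In a real training loop the parameters of such a network would be treated as hyperparameters and updated from the main-task loss on held-out data, rather than left at their random initial values.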

An illustrative example

Learning with many auxiliaries

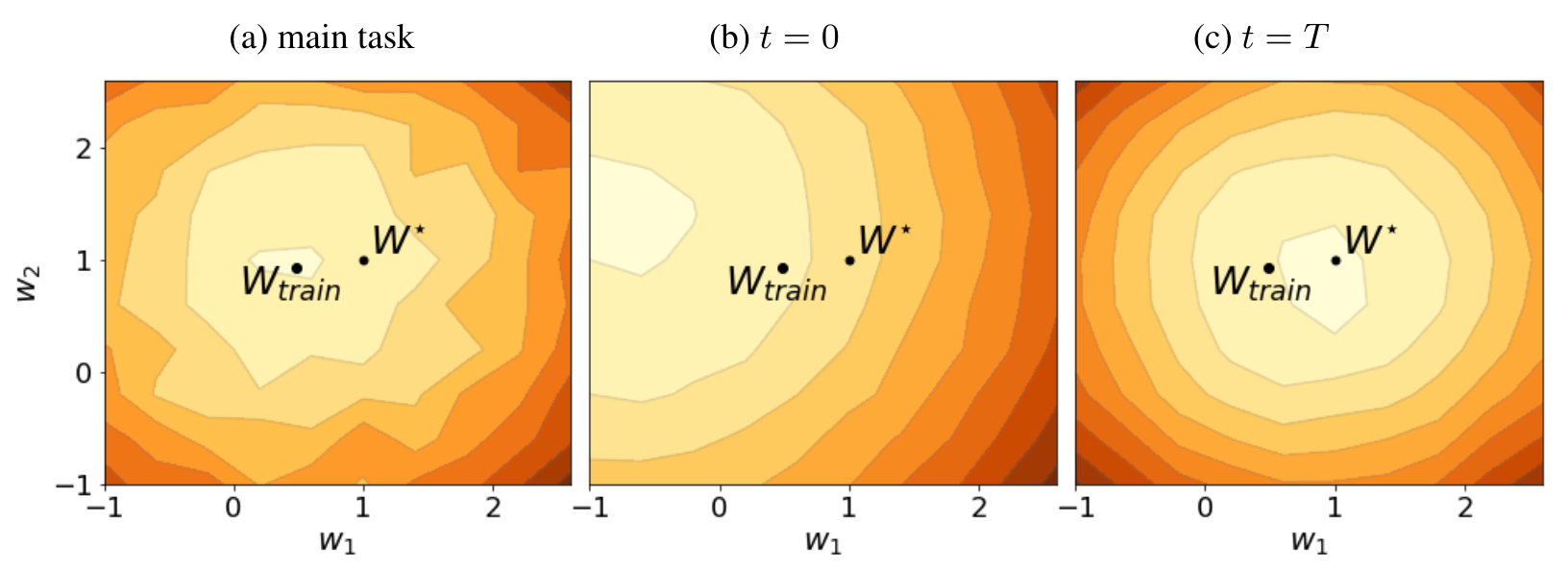

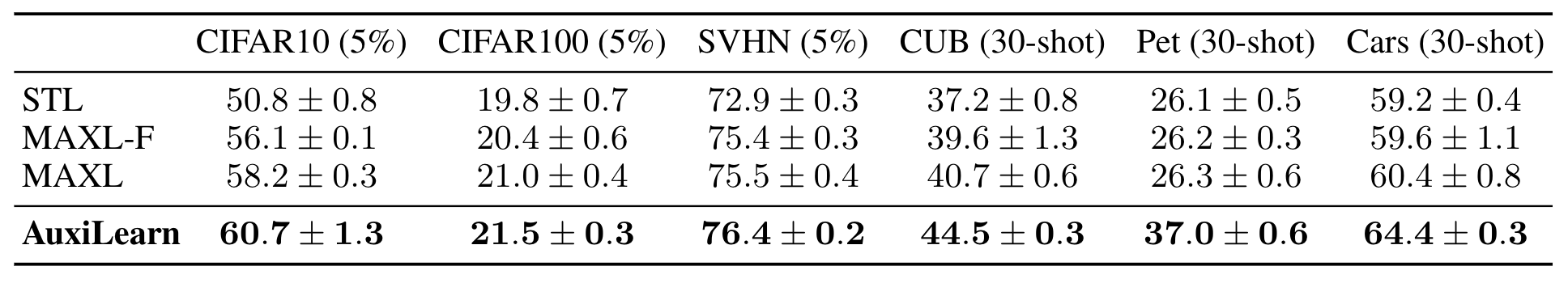

We evaluated AuxiLearn on fine-grained classification of bird species (CUB-200 dataset) using 312 auxiliary tasks. Each image is associated with a species (one of 200) and a set of 312 binary visual attributes, which we use as auxiliaries. Here we focus on a semi-supervised setting, in which auxiliary labels are available for all images, but main-task labels are available for only 5 or 10 images per class (denoted 5-shot and 10-shot, respectively).
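The semi-supervised split described above can be sketched as a boolean mask over the training set that marks which images keep their main-task label. The helper name `k_shot_mask` and the uniform random sampling are assumptions for illustration, since the exact selection procedure is not specified here.

```python
import random
from collections import defaultdict


def k_shot_mask(labels, k, seed=0):
    """Return a list of booleans: True where the main-task label is kept.

    At most k examples per class keep their label. Auxiliary (attribute)
    labels are assumed to stay available for every example.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    keep = set()
    for indices in by_class.values():
        keep.update(rng.sample(indices, min(k, len(indices))))
    return [i in keep for i in range(len(labels))]


# Usage on a toy label list with two classes of 30 images each.
toy_labels = [0] * 30 + [1] * 30
mask_5_shot = k_shot_mask(toy_labels, k=5)
```

During training, the main-task loss would be computed only on examples where the mask is true, while the auxiliary losses use all examples.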

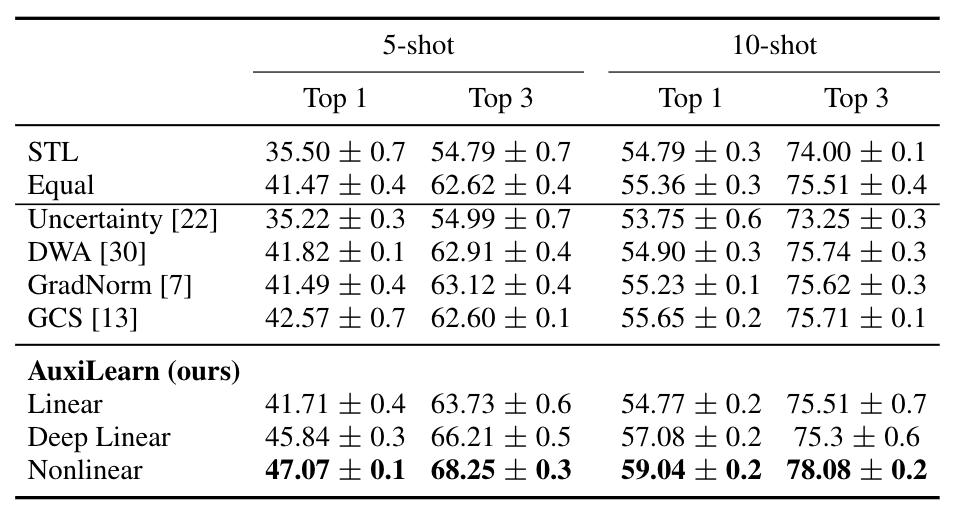

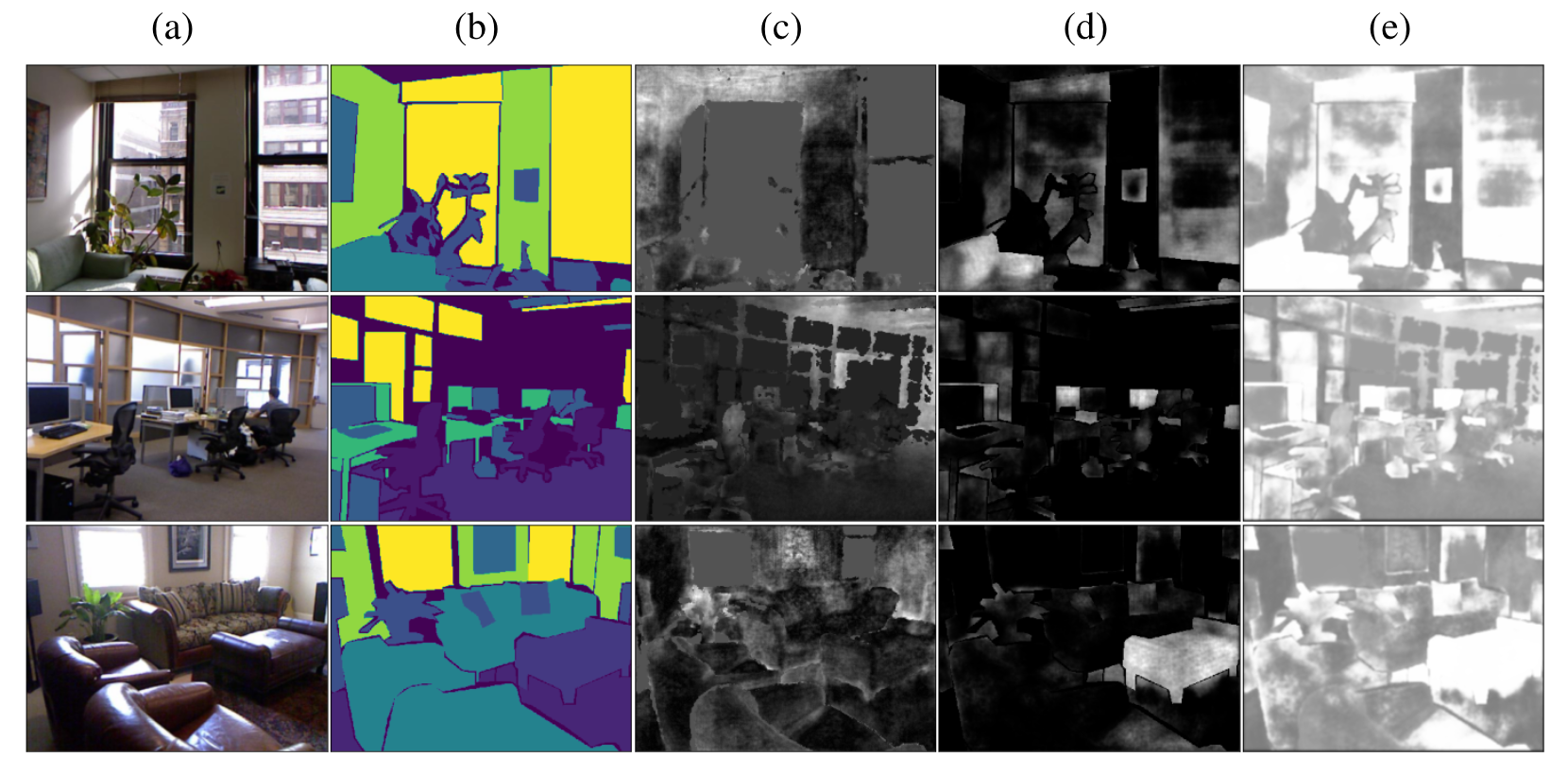

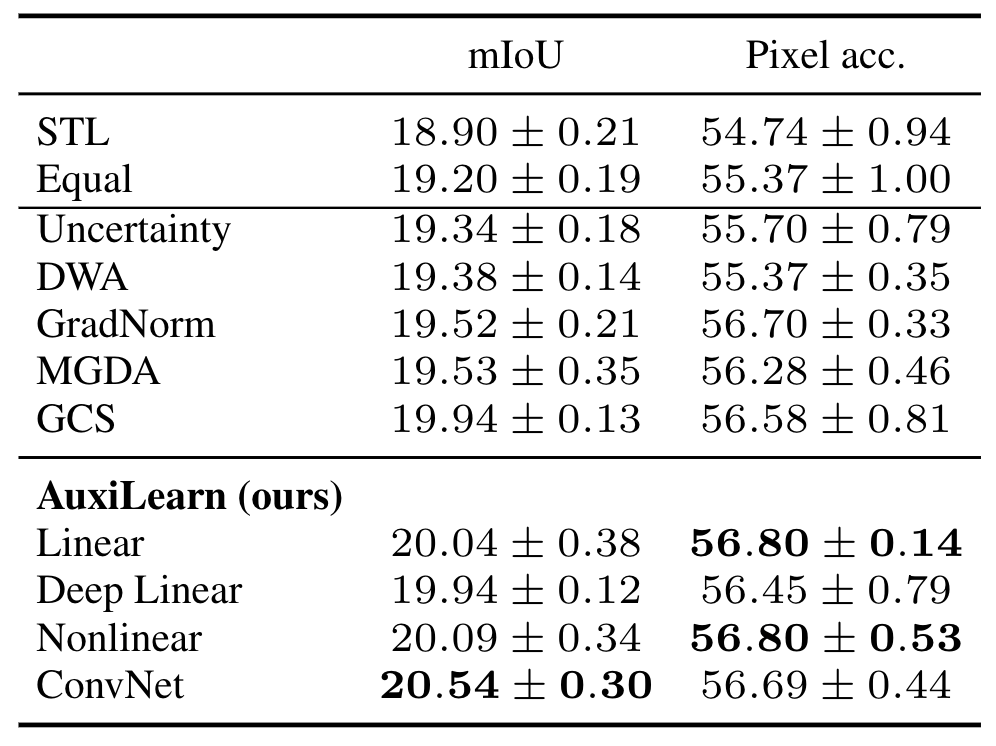

Convolutional loss network for pixel-wise tasks

In certain problems, there exists a spatial relation among losses. For example, consider the tasks of semantic segmentation, depth estimation and surface-normal estimation for images. The common approach is to average the losses over all locations. We can, however, leverage this spatial relation to create a loss-image, in which each task forms a channel of the pixel-wise losses induced by that task. We can then stack those channels and parametrize the loss network g as a CNN that acts on this loss-image. Here we evaluate AuxiLearn on the main task of semantic segmentation on the NYUv2 dataset.
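The loss-image idea can be sketched with plain nested lists: each task contributes one channel of per-pixel losses, and a single 3x3 convolution with zero padding maps the stacked channels to one value per pixel before spatial averaging. The single-layer design, the kernel values, and the function names are assumptions for illustration. A practical loss network would use a deep-learning library and learned weights.

```python
def mean_baseline(loss_image):
    """Common approach: average all per-pixel losses over tasks and locations."""
    values = [v for channel in loss_image for row in channel for v in row]
    return sum(values) / len(values)


def conv_loss(loss_image, kernel, bias=0.0):
    """Apply one 3x3 convolution (zero padding, C channels -> 1 channel)
    to a loss-image of shape [C][H][W], then average over locations."""
    n_ch = len(loss_image)
    height = len(loss_image[0])
    width = len(loss_image[0][0])
    total = 0.0
    for i in range(height):
        for j in range(width):
            acc = bias
            for c in range(n_ch):
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        y, x = i + di, j + dj
                        if 0 <= y < height and 0 <= x < width:
                            acc += kernel[c][di + 1][dj + 1] * loss_image[c][y][x]
            total += acc
    return total / (height * width)


# Toy 2x2 loss-image with channels: segmentation, depth, surface normals.
loss_image = [
    [[0.2, 0.4], [0.6, 0.8]],
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.0, 0.3], [0.3, 0.0]],
]

# A kernel with only a centre weight of 1/C per channel reproduces the
# plain average, so averaging is a special case of this parametrization.
n_tasks = len(loss_image)
center_kernel = [
    [[0.0, 0.0, 0.0], [0.0, 1.0 / n_tasks, 0.0], [0.0, 0.0, 0.0]]
    for _ in range(n_tasks)
]
```

Off-centre kernel weights let the loss at one pixel depend on the losses of neighbouring pixels and of other tasks, which plain averaging cannot express.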

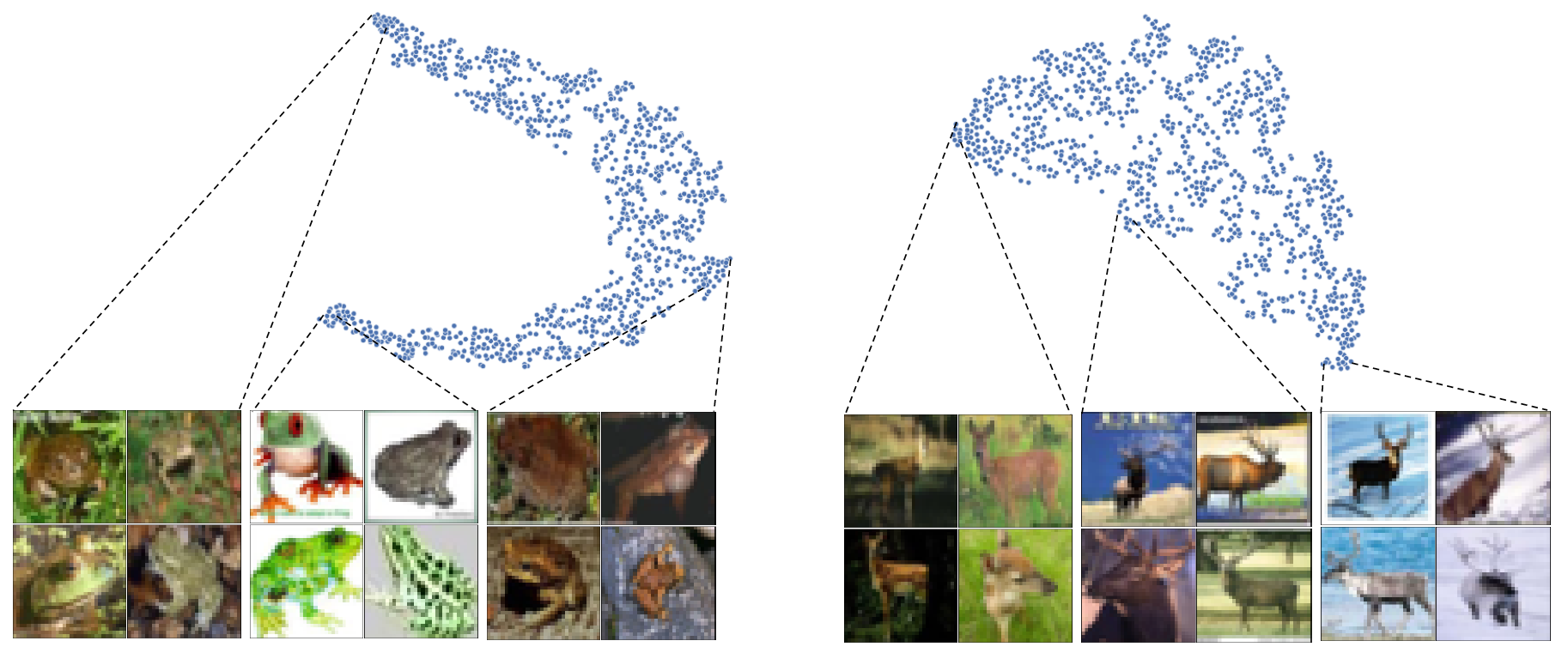

Learning a novel classification auxiliary task

Bibtex

Acknowledgements

This study was funded by a grant to GC from the Israel Science Foundation (ISF 737/2018), and by an equipment grant to GC and Bar-Ilan University from the Israel Science Foundation (ISF 2332/18). IA was funded by a grant from the Israel Innovation Authority, through the AVATAR consortium.